Haoge Deng

I am a PhD student jointly supervised by the Institute of Automation, Chinese Academy of Sciences (CASIA), and Beijing Academy of Artificial Intelligence (BAAI), under the supervision of Prof. Zhaoxiang Zhang and Dr. Xinlong Wang. I obtained my MSc degree at BUPT in China, supervised by Prof. Yonggang Qi. I also received my Bachelor's degree in Electronics Information Science and Technology from BUPT in 2022. My research interests include generative models, with a particular focus on multimodal generation.

Research

Representative papers are highlighted. * indicates equal contribution, # indicates corresponding author.

Emu3.5: Native Multimodal Models are World Learners

BAAI

[arxiv] | [project page] | [code]

Emu3.5 is a natively multimodal world model that unifies vision and language through end-to-end next-token prediction on interleaved video-derived data, enhanced by reinforcement learning and DiDA-based parallel decoding for efficient, spatiotemporally consistent generation.

Uniform Discrete Diffusion with Metric Path for Video Generation

Haoge Deng*, Ting Pan*, Fan Zhang*, Yang Liu*, Zhuoyan Luo, Yufeng Cui, Wenxuan Wang, Chunhua Shen, Shiguang Shan, Zhaoxiang Zhang#, Xinlong Wang#

International Conference on Learning Representations (ICLR, TH-CPL A), 2026

[arxiv] | [project page] | [code] | [hugging face 🤗 daily papers]

URSA is a simple yet powerful discrete framework that formulates video generation as an iterative process of global refinement over spatiotemporal tokens, enabling efficient scaling to long-duration videos.

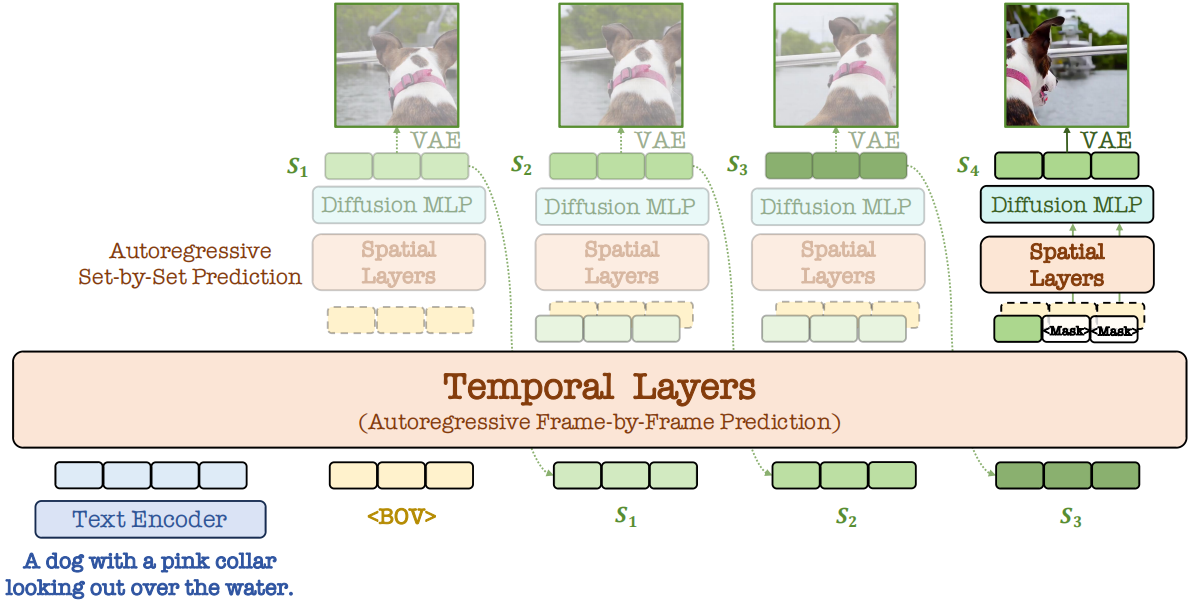

Autoregressive Video Generation without Vector Quantization

Haoge Deng*, Ting Pan*, Haiwen Diao*, Zhengxiong Luo*, Yufeng Cui, Huchuan Lu, Shiguang Shan, Yonggang Qi#, Xinlong Wang#

International Conference on Learning Representations (ICLR, TH-CPL A), 2025

[arxiv] | [project page] | [code] | [openreview] | [post] | [iclr poster] | [hugging face 🤗 daily papers]

NOVA is a non-quantized autoregressive model that enables efficient video generation by reformulating video creation as frame-by-frame and set-by-set prediction.

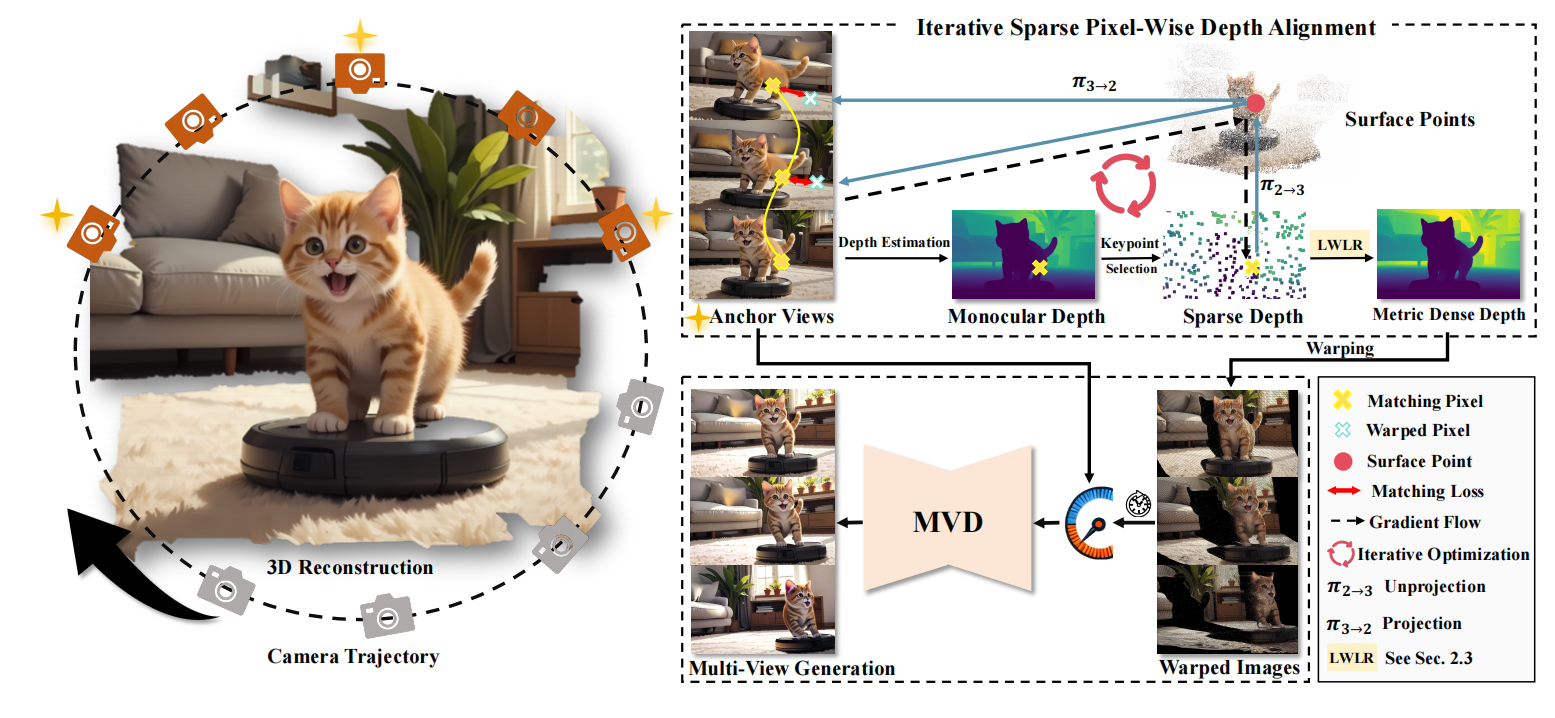

You See it, You Got it: Learning 3D Creation on Pose-Free Videos at Scale

Baorui Ma*, Huachen Gao*, Haoge Deng*, Zhengxiong Luo, Tiejun Huang, Lulu Tang#, Xinlong Wang#

IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR, CCF-A), 2025 (Highlights, ~3% acceptance rate)

[arxiv] | [project page] | [code] | [dataset] | [post] | [hugging face 🤗 daily papers]

See3D is a scalable visual-conditional multi-view diffusion (MVD) model for open-world 3D creation, which can be trained on web-scale video collections without camera pose annotations.

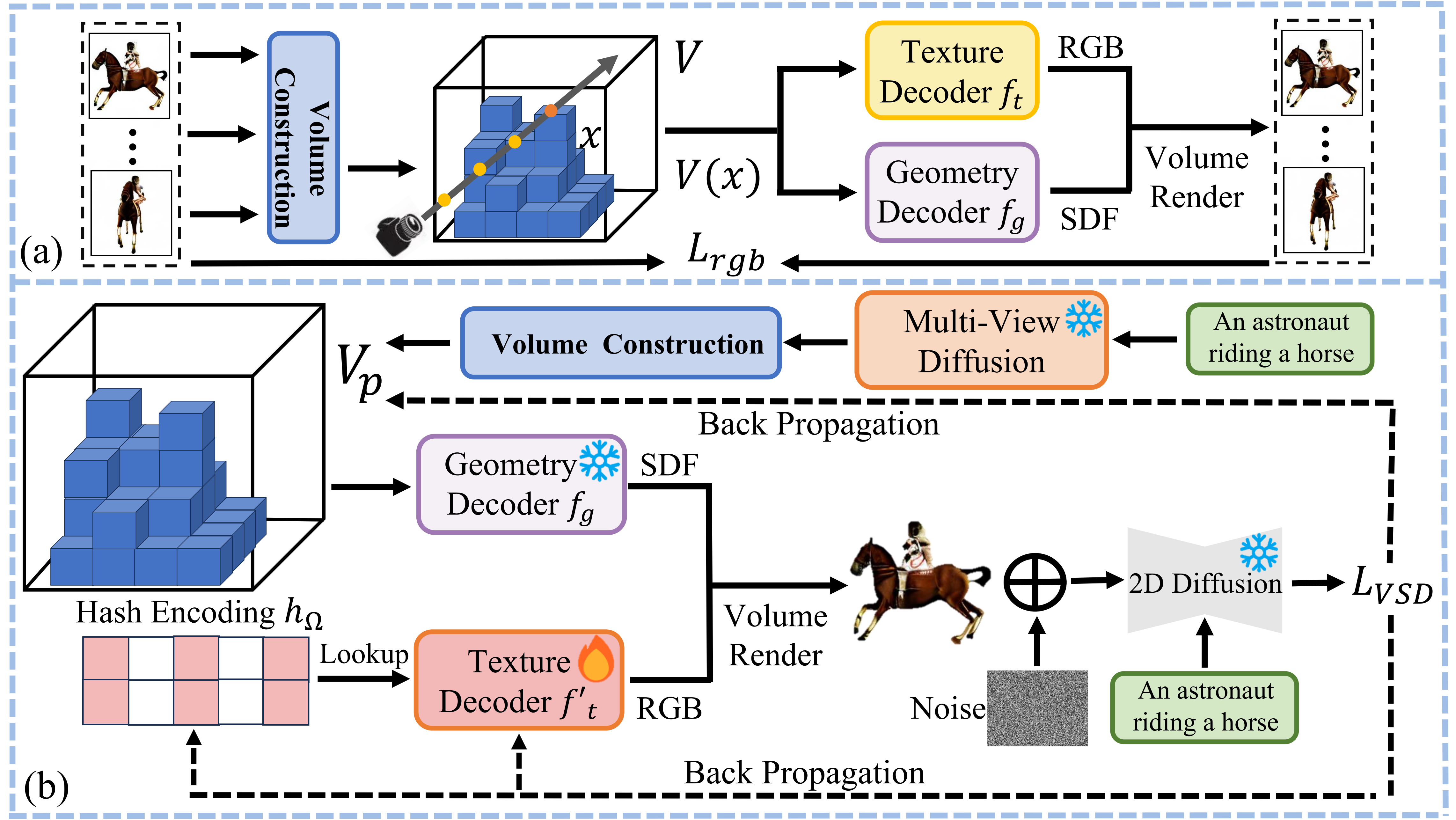

GeoDream: Disentangling 2D and Geometric Priors for High-Fidelity and Consistent 3D Generation

Baorui Ma*, Haoge Deng*, Junsheng Zhou, Yu-Shen Liu, Tiejun Huang, Xinlong Wang#

arXiv, 2023

[arXiv] | [project page] | [code]

GeoDream is a 3D generation method that integrates explicit generalized 3D priors with 2D diffusion priors, improving its ability to produce unambiguous, 3D-consistent geometric structures without sacrificing diversity or fidelity.

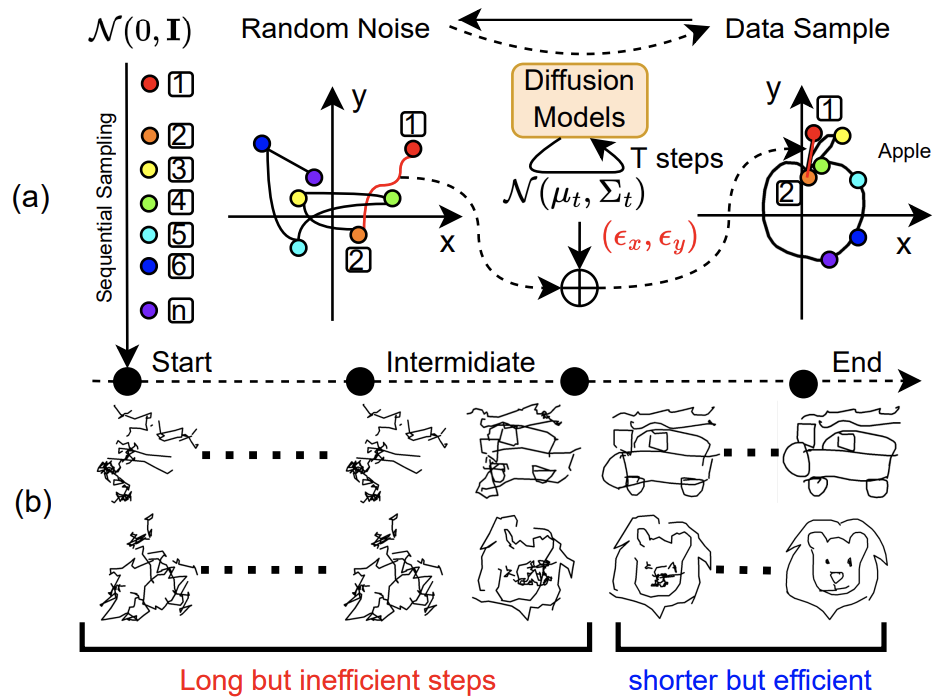

SketchKnitter: Vectorized Sketch Generation with Diffusion Models

Qiang Wang, Haoge Deng, Yonggang Qi#, Da Li, Yi-Zhe Song

International Conference on Learning Representations (ICLR, TH-CPL A), 2023 (Spotlight, ~5% acceptance rate)

[paper] | [openreview] | [code]

SketchKnitter is a method for vectorized sketch generation that reverses the stroke deformation process using a diffusion model learned from real sketches, enabling the creation of higher-quality, visually appealing sketches with fewer sampling steps.

Education

Ph.D. in Pattern Recognition and Intelligent Systems, under the supervision of Prof. Zhaoxiang Zhang and Dr. Xinlong Wang, Sept. 2025 ~ July 2028 (Expected)

Master of Science in Artificial Intelligence, under the supervision of Prof. Yonggang Qi, Sept. 2022 ~ July 2025

Bachelor of Engineering in Electronics Engineering, Outstanding Graduate of Beijing Province, Sept. 2018 ~ July 2022

Experiences

Research Intern at BAAI-Vision, research on video generation, advised by Dr. Zhengxiong Luo and Dr. Xinlong Wang, Jun. 2024 ~ Present

Research Intern at BAAI-Vision, research on 3D content generation, advised by Dr. Baorui Ma and Dr. Xinlong Wang, Jun. 2023 ~ Jun. 2024

Research Intern at Meituan-AutoML, research on LiDAR range generation, advised by Dr. Zhi Tian and Dr. Xiangxiang Chu, Jan. 2023 ~ Jun. 2023

Academic Services
